- BEST TO USE NAS OR SAN FOR VM ESXI 6.5 HOW TO

- BEST TO USE NAS OR SAN FOR VM ESXI 6.5 ARCHIVE

- BEST TO USE NAS OR SAN FOR VM ESXI 6.5 PSP

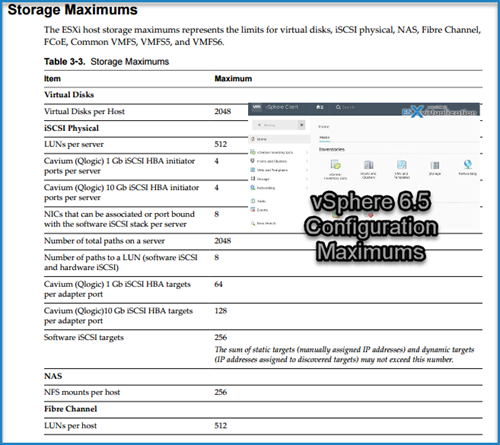

The drives in the NAS are Synology-certified HGST 4TB drives, and the specs say they have a sustained transfer rate of 192MB/s. That's assuming you're not doing ANY random IO and have a 100% pure sequential workload. An old (and slightly out-of-date) read that is still worth reading is this. You could use StarWind's Virtual SAN or VMware's vSAN to leverage the local storage on the host(s). So we'd be looking at moving away from traditional separate storage and compute to having them converged. This eliminates the latency of having to access your drives over the network.
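To show why a 192MB/s sequential rating says little about VM workloads, here is a rough service-time model for a single spinning drive. Random IOPS are estimated as 1 / (average seek + average rotational latency + transfer time). The 7200rpm spindle speed, the 8.5ms average seek and the 4KiB block size are assumptions for illustration, not HGST figures quoted in the thread.

```python
"""Rough per-drive random vs sequential throughput estimate (illustrative sketch)."""


def random_iops(rpm: float, avg_seek_ms: float, block_kib: float, seq_mb_s: float) -> float:
    """Estimate random IOPS from seek time, rotational latency and transfer time."""
    # Average rotational latency is half a revolution.
    rotational_ms = 0.5 * 60_000 / rpm
    # Time to transfer one block at the sequential media rate (MB = 10**6 bytes).
    transfer_ms = block_kib * 1024 / (seq_mb_s * 1_000_000) * 1000
    service_ms = avg_seek_ms + rotational_ms + transfer_ms
    return 1000 / service_ms


def random_mb_s(iops: float, block_kib: float) -> float:
    """Convert an IOPS figure into MB/s for a given block size."""
    return iops * block_kib * 1024 / 1_000_000


if __name__ == "__main__":
    # Assumed values: 7200 rpm, 8.5 ms average seek, 4 KiB random IO.
    # 192 MB/s is the sustained sequential rate quoted for the drives.
    iops = random_iops(rpm=7200, avg_seek_ms=8.5, block_kib=4, seq_mb_s=192)
    print(f"~{iops:.0f} random IOPS, ~{random_mb_s(iops, 4):.2f} MB/s at 4 KiB")
```

With these assumptions, one drive manages about 79 random IOPS. That is roughly 0.32MB/s of 4KiB random IO, against the 192MB/s it reaches when reading sequentially.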

BEST TO USE NAS OR SAN FOR VM ESXI 6.5 PSP

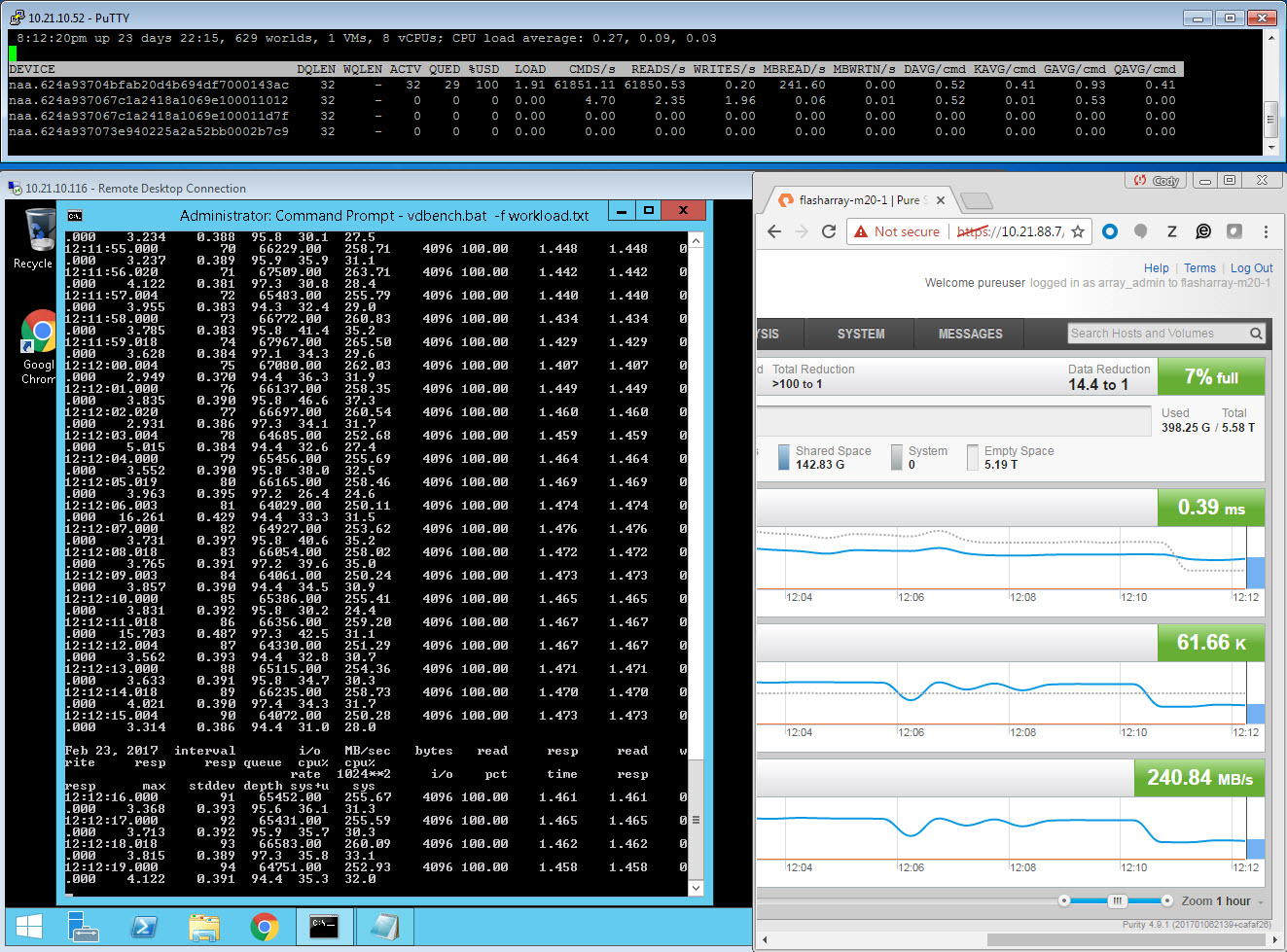

My users would murder me if I deployed something like this. iSCSI doesn't work with LACP unless you are opening multiple sessions per LUN (hint: vSphere does not support this). You are testing throughput (a useless metric for virtual machines) and ignoring random IO (which is what the IO blender that is virtualization produces). Throughput is not likely going to be your bottleneck. The bigger concern is the incredibly low IOPS of those drives. I would also note that they only certified the Most Recently Used (MRU) path selection policy (PSP), so trying to use more than one link may prove problematic.
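Here is a back-of-the-envelope sketch of why IOPS, not throughput, is the concern. RAID 10 can serve reads from every spindle, but each write lands on two disks, which is a write penalty of 2. The per-disk figure of 80 IOPS is an assumed rule of thumb for a 7200rpm drive. The 70/30 read/write mix and the 4KiB block size are also hypothetical.

```python
"""Back-of-the-envelope RAID 10 IOPS estimate for a mixed random workload (sketch)."""

RAID10_WRITE_PENALTY = 2  # every write is mirrored to two disks


def raid10_frontend_iops(disks: int, per_disk_iops: float, read_fraction: float) -> float:
    """Host-visible IOPS an array of `disks` drives can sustain for a read/write mix."""
    if disks < 2 or disks % 2:
        raise ValueError("RAID 10 needs an even number of disks (at least 2)")
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be between 0 and 1")
    backend_iops = disks * per_disk_iops
    # Each host read costs one back-end IO, each host write costs two.
    cost_per_io = read_fraction + (1 - read_fraction) * RAID10_WRITE_PENALTY
    return backend_iops / cost_per_io


if __name__ == "__main__":
    # Assumed: 6 drives (as in the NAS), ~80 IOPS per 7200 rpm drive,
    # 70% reads, 4 KiB (4096-byte) random IO.
    iops = raid10_frontend_iops(disks=6, per_disk_iops=80, read_fraction=0.7)
    mb_s = iops * 4096 / 1_000_000
    print(f"~{iops:.0f} IOPS, ~{mb_s:.1f} MB/s of 4 KiB random IO")
```

Under these assumptions, the whole six-drive array delivers roughly 370 IOPS. That works out to about 1.5MB/s of 4KiB random IO, far below what even a single gigabit link can carry.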

BEST TO USE NAS OR SAN FOR VM ESXI 6.5 ARCHIVE

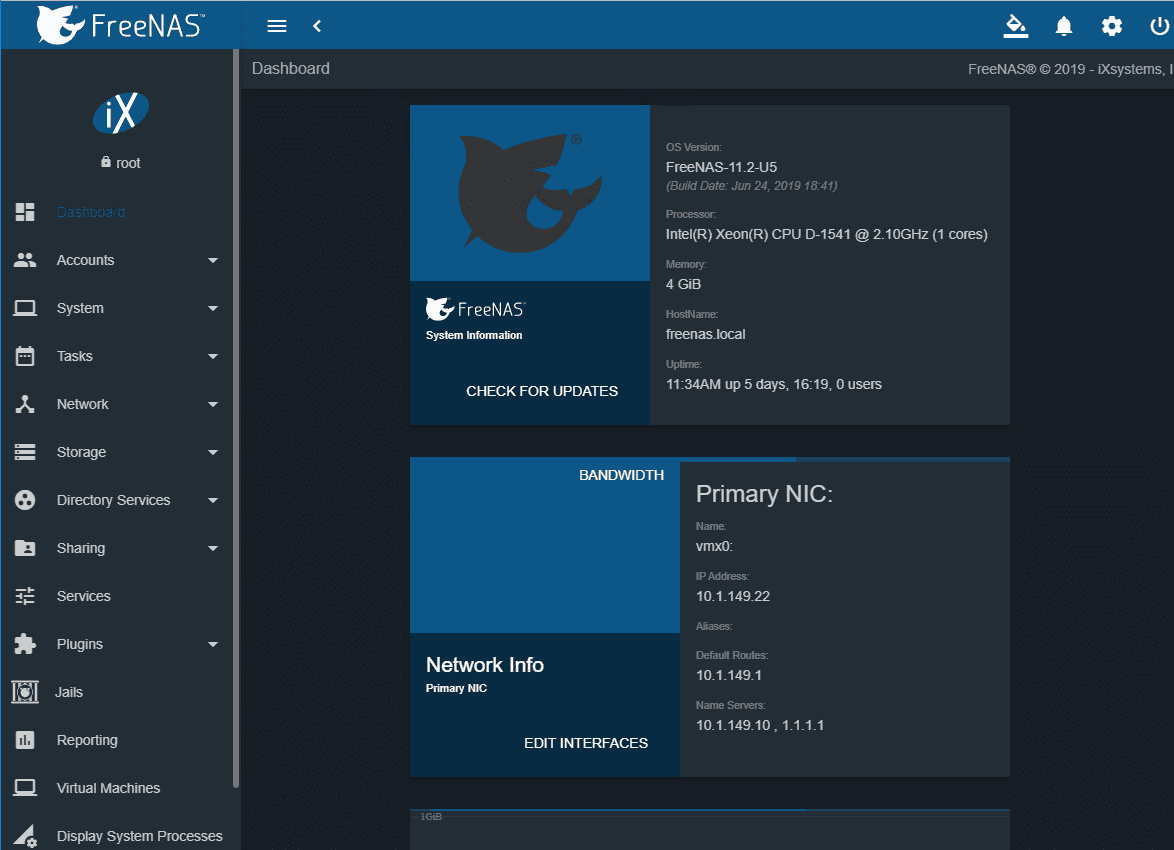

So I followed everything in the VMware KB and set up port binding etc. The Gigabit switch is dumb, so there is no LACP/dynamic link aggregation, though jumbo frames are set up on both ends, on all NICs. Now when testing I am getting up to 80MB/s. It varies between 66 and 80MB/s but mostly stays on the higher side. But I see it is using only one of the 3 NICs on the NAS; the other two are not transceiving anything. Multiple sessions are enabled on the NAS, and all NICs are on the same subnet/broadcast domain. The service status on the NAS shows 9 sessions, 3 from each host NIC.

Then I tried binding the 3 NICs on the NAS together under one IP (which it reports as 3000Mbps) and left the host side as it was. Now I am getting 95MB/s, sometimes peaking up to 115MB/s. I have tested both setups several times, restarting both the host and the NAS. I am using just one big LUN.

Now I have several questions:

- What should be an average, realistic and acceptable transfer rate between host and NAS for this setup?
- What's the best practice for this sort of setup?
- How many LUNs per target should there be?
- Should I try a managed switch with LACP etc.?

Any help, recommendation or comment that could educate me more on best practices for iSCSI with VMware would be super helpful, or a link to an article that details everything. Thank you for your time, and sorry if something sounds stupid or incomplete!

Unless this is a "cold" archive, you're going to hate your performance unless you put some SSD caching on that, and even then it's still not going to be that good.
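The 80MB/s and 95 to 115MB/s figures make more sense next to the payload ceiling of a gigabit link carrying TCP, which this sketch estimates. It assumes standard Ethernet framing (preamble, MAC header, FCS and inter-frame gap) and 20-byte IPv4 and TCP headers with no options. It ignores iSCSI PDU headers, so real numbers will be slightly lower. It also assumes traffic is spread evenly over `active_paths` links, which only happens when the path selection policy actually uses more than one path at a time.

```python
"""Approximate TCP payload ceiling for one or more gigabit Ethernet paths (sketch)."""

# Per-frame Ethernet overhead on the wire, in bytes:
# preamble+SFD (8) + MAC header (14) + FCS (4) + inter-frame gap (12).
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12
IP_TCP_HEADERS = 20 + 20  # IPv4 + TCP, no options (assumption)


def payload_mb_s(link_gbps: float, mtu: int, active_paths: int = 1) -> float:
    """Max TCP payload rate in MB/s (10**6 bytes/s) across `active_paths` links."""
    payload_per_frame = mtu - IP_TCP_HEADERS
    wire_bytes_per_frame = mtu + ETHERNET_OVERHEAD
    efficiency = payload_per_frame / wire_bytes_per_frame
    line_rate_mb_s = link_gbps * 1000 / 8  # 1 Gbps = 125 MB/s
    return line_rate_mb_s * efficiency * active_paths


if __name__ == "__main__":
    for mtu in (1500, 9000):
        for paths in (1, 2, 3):
            print(f"MTU {mtu}, {paths} active path(s): "
                  f"~{payload_mb_s(1, mtu, paths):.0f} MB/s")
```

With jumbo frames, a single path tops out at around 124MB/s, so 95 to 115MB/s means one link is close to saturated. Reaching about 200MB/s would need at least two paths active at the same time. A single-path policy such as MRU does not provide that.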

BEST TO USE NAS OR SAN FOR VM ESXI 6.5 HOW TO

The idea is to simply connect the host and the NAS directly through a switch, since the server has 8 NIC ports and the NAS comes with 4, all Gbps: one port on the NAS for management and the other 3 for iSCSI. Initially I thought I could bind/team the 3 NICs on the NAS to form a big fat 3Gbps pipe and do the same on the host. I wasn't aware of how to set up iSCSI and port binding.
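The host side of a port-binding setup can be scripted. This sketch only builds `esxcli` command lines and does not run them. Every name in it is a hypothetical placeholder, so check the real names on your host:

- the adapter name `vmhba33`
- the port group names `iSCSI-1` to `iSCSI-3`
- the VMkernel ports `vmk1` to `vmk3`
- the uplinks `vmnic1` to `vmnic3`
- the target address `192.168.10.10:3260`

It follows the usual rule for software iSCSI port binding: each bound VMkernel port's port group should have exactly one active uplink. It is worth confirming in the client afterwards that the remaining uplinks show as unused.

```python
"""Build (not run) esxcli commands for software iSCSI port binding (sketch)."""

from typing import List, Tuple


def port_binding_commands(adapter: str, bindings: List[Tuple[str, str, str]],
                          target: str) -> List[str]:
    """Return esxcli command lines that bind VMkernel ports to a software iSCSI adapter.

    `bindings` is a list of (portgroup, vmk, vmnic) tuples; all names are placeholders.
    """
    cmds = ["esxcli iscsi software set --enabled=true"]
    for portgroup, vmk, vmnic in bindings:
        # Port binding expects each VMkernel port group to have one active uplink.
        cmds.append(
            "esxcli network vswitch standard portgroup policy failover set "
            f"--portgroup-name={portgroup} --active-uplinks={vmnic}"
        )
        # Bind the VMkernel port to the software iSCSI adapter.
        cmds.append(f"esxcli iscsi networkportal add --adapter={adapter} --nic={vmk}")
    # Dynamic (SendTargets) discovery against the NAS, then rescan for the LUN.
    cmds.append(
        f"esxcli iscsi adapter discovery sendtarget add --adapter={adapter} --address={target}"
    )
    cmds.append(f"esxcli storage core adapter rescan --adapter={adapter}")
    return cmds


if __name__ == "__main__":
    # Hypothetical names: 3 port groups / vmknics / uplinks, one NAS portal (port 3260).
    example = [
        ("iSCSI-1", "vmk1", "vmnic1"),
        ("iSCSI-2", "vmk2", "vmnic2"),
        ("iSCSI-3", "vmk3", "vmnic3"),
    ]
    for line in port_binding_commands("vmhba33", example, "192.168.10.10:3260"):
        print(line)
```

Generating the commands instead of executing them keeps the sketch safe to run anywhere. You can review the output before pasting it into an ESXi shell.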

I am setting up an ESXi host with a Synology NAS. The host server itself has 12x3TB RAW hard drive space (16.4TB at RAID10). The current requirement is to be able to support up to 25TB (which would be good for the next 3 years); that's why we are sticking with Synology for now. Budget is limited, so no fancy SAN stuff would get approved. The 8-bay NAS is extendable to 18 drives with an expansion unit, making it future-proof. Currently I have put in 6x4TB RAW (11TB at RAID10). With RAID 10 mirroring, I am hoping to see something around 200MB/s between the NAS and the host.
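The capacity figures come from two effects: RAID 10 halves raw space, and the host and NAS report binary units (TiB) while drives are sold in decimal TB. The sketch below reproduces the 16.4 and 11 figures. It also estimates how many 4TB drives the 25TB requirement would take. It assumes the 25TB target is in TiB, like the UI figures, and ignores filesystem overhead.

```python
"""RAID 10 usable-capacity arithmetic in decimal TB and binary TiB (sketch)."""

import math

TB = 10**12   # drive vendors use decimal terabytes
TIB = 2**40   # OS/NAS UIs usually report binary tebibytes


def raid10_usable_tib(drives: int, drive_tb: float) -> float:
    """Usable RAID 10 capacity in TiB: half the raw capacity (mirrored pairs)."""
    if drives < 2 or drives % 2:
        raise ValueError("RAID 10 needs an even number of drives (at least 2)")
    return drives * drive_tb * TB / 2 / TIB


def drives_needed(target_tib: float, drive_tb: float) -> int:
    """Smallest even drive count whose RAID 10 usable capacity meets target_tib."""
    n = math.ceil(2 * target_tib * TIB / (drive_tb * TB))
    return n + (n % 2)  # round up to an even number for mirrored pairs


if __name__ == "__main__":
    print(f"host 12x3TB: {raid10_usable_tib(12, 3):.1f} TiB")  # quoted as 16.4TB
    print(f"NAS 6x4TB:   {raid10_usable_tib(6, 4):.1f} TiB")   # quoted as 11TB
    print(f"25 TiB needs {drives_needed(25, 4)} x 4TB drives in RAID 10")
```

Under these assumptions, 14 drives of 4TB are needed. That fits within the 18-drive limit of the 8-bay unit plus its expansion unit.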
